Filling millions of orders in seconds

Do not fear the perfection because you will never reach it.

Youssoupha.

Introduction

The Matching Engine or ME as we use to abbreviate it internally, is one of core engine that makes HFT (High Frequency Trading) possible. It's the one responsible of executing orders of different market participants at … well light speed !

Accurate order matching is a critical component of an exchange. Investors, particularly active investors and day traders, will look for ways to minimize inefficiencies in trading from every possible source. A slow matching engine may cause buyers / sellers to execute trades at less-than-ideal prices, causing them to run into losses. At Bitwyre we understood that and that's why we're committed into offering you with a fair and fast matching engine.

This article is both developer friendly and newbie trader friendly. In the course of this article, we are going to shed some lights on the internal working of a matching order system, and we will eventually talk about some latencies and benchmarks of our own implementation of it.

What's the Matching Engine ?

Before electronic trading platforms, buyer and sellers were doing what is called open outcry. Open Outcry is a method of communication between people on a Stock exchange or futures exchange typically on a trading floor. It involves shouting and the use of hand signals to transfer information primarily about buy and sell orders. The part of the trading floor where this takes place is called a pit. Basically there people are yelling the prices and asset they want to buy or sell, and if there were a corresponding matching order, a trade will actually happen.

But as of today with the advent of Electronic Trading, people now place their orders from a computer and it's the system behind the platform they used which is responsible of doing the matching. That actual system behind the scene doing the matching is called the Matching Engine or the Order Matching System.

The stock market is form of Buyers and Sellers, they are continuously placing orders, and that set of orders is what constitute the orderbook.

The orderbook is usually splitted into two side books:

- The first sidebook for sell orders

- The second sidebook for buy orders

The matching engine task is usually to take as input an incoming order (which can be either buy or sell) and the corresponding side book from the order book.

According to the type of the incoming order, the opposite side book of the order book is chosen. That said if the incoming order is a buy order then the side book that will be chosen will be sell orders side book.

After consuming its inputs the matching engine will produce as output trades and resting orders. Resting orders are those orders that were not able to get match during the process. Those orders will form the next orderbook for subsequent matching requests..

Because a picture may be worth than thousand of words, the above illustration shows that process briefly.

Yeah nice illustration but man, how do you do to know which order will be matched first ? Is it black magic ?

Nice question this lead us to the next section different types of matching algorithms.

Different types of matching algorithm

The market is the place where makers and takers meet and decide about price changes. For this price changes to happen different orders must be matched together, and this match is not done randomly they're some set of rules which govern that.

Inside the market, orders are filled following different types of algorithms. We can denote :

- FIFO (First In First Out): In this algorithm orders are prioritized on a price-time basis

- FIFO with Pro-Rata: Orders are filled according to price, order lot size and time. An incoming order from a market participant is evenly split among matching counter orders proportionally to their size.

- FIFO Pro-Rata with top order: In this case, the oldest counter order is fully filled, and then a pro-rata allocation is performed with all the remaining counter orders.

For a more complete list you can check the CME website.

What you have to note is that the key difference between those algorithms resides in the way allocations are made.

The algorithm that will retain our attention for the rest of this article is … FIFO !

Inside the meander of FIFO

FIFO which stands for First In First Out is an allocation algorithm that prioritize orders based on their price and time.

Inside the Buy Book, the orders are sorted in such a way that those with higher prices are located at the top. if there are multiple orders having the same price, then they're ordered by their arrival time.

Inside the Sell Book, the orders are sorted in such a way that those with lower prices are located at the top. if there are multiple orders having the same price, then they're ordered by their arrival time.

The order with the highest price in the Buy Book is called the Best Bid and the order with the lowest price inside the Sell Book is called the Best Offer. The difference between those two prices form what is called the Spread

We can see here that apart from price, the time is a really impacting factor in matching process using FIFO. As a trader the more you hesitate to place your order the more you're losing positions inside the market, but anyway you have to chose your position wisely else you may go bankrupt in no seconds as the market is changing rapidly.

Note : In the following diagram

- Buy x@y means buy a specific asset for quantity x at y price

- Sell x@y means sell a specific asset for quantity x qt y price

Hmm it sounds funny till here but how do you really decide that two orders can be paired together ?

Generally, a buy order and a sell order are compatible if the maximum price of the buy order matches or exceeds the minimum price of the sell order. Translated into code it can gives us something like:

static auto canMatch(const Order& incomingOrder, const Order& inBookOrder) noexcept -> bool {

assert(incomingOrder.side() != inOrderBook.side() && "Other type must be different!");

if (incomingOrder.getSide() == Side::SELL) {

return incomingOrder.price() <= inOrderBook.price();

}

return incomingOrder.price() >= inOrderBook.price();

}

If we have inside our Sell Book a resting order with quantity 10 at price 101 (Sell 10@101), and then another market participant places a buy order with quantity 3 at price 100 (Buy 3@100) and we pass those two orders to our functioncanMatch(incomingOrder = 3@100, inBookOrder = 10@101) , we will see that it will return false because the seller is selling higher than what the buyer is ready to pay.

As a result of that function returning false, the incoming order will be inserted inside the orderbook (buy book) untill a corresponding sell order that matches arrives. Our order book state will become:

For a match scenario let's still consider the order book state from our previous illustration (After the insertion of Buy 3@100).

Another market participant just pop in and place a new request to buy 1 lot the security at 102 (Buy 1@102).

In this scenario its order will be matched with the best offer inside the buy book at that time, which is in this case is Sell 2@102.

Because the quantity the buyer is willing to take is less than what the seller is giving out, only the requested quantity will be removed from the counter matching sell order, and the remaining will stay inside the order book waiting kindly for another agressor order (Yes, patience is a virtue :) )

The in book order is said to be Partially Filled and the incoming order is said to be Fully Filled

When a match occurs, the order can be in either of the following statuses :

- Filled: means the whole quantity of the order was taken, thus the order is removed from the order book

- Partially Filled: means only a certain quantity was removed from the order, the order still remain at its position with the remaining quantity inside the order book.

This is the type of process that get repeated again and again inside the matching engine. Sometimes turning at the advantage of some participants, sometimes not.

With this glance coverage of the order matching system, I can assume that you can already roll out your own Implementation of it with an allocation algorithm of your choice ;)

I may dare to do so man, but the title of your article says filling millions of orders in seconds. I'm still wondering myth or reality?

Seems like I'm already tired, can we do that next time ?

You sold me a dream and then you run away?

Okay enough talking !

Bitwyre's Matching Engine Implementation

Our own implementation leverages Modern C++ (C++20 the latest standard available), it comes out with a lot of handy features that helped simplifying our code and achieving great performance.

From a technical perspective, the Matching Engine can be seen as a data structure that holds its elements sorted after every operations on it, given that it's either the buy book or the sell book.

The common operations that are perfomed inside the orderbook are :

- Insertion : when a market participant places a new bid/ask order

- Deletion/Cancellation: when a market participant decides to withdraw their order from the market.

With a suitable data structure that permits us fast insertion, fast deletion and that we can guarantee that the elements inside it are in their right order according to the sorting criteria, we would be able to reach the targeted throughput.

Meh, that's a lot for a single data structure...

Well, yeah it's but can you think of such data structure ?

std::vector<Order>

The first DS that we will explore is std::vector<Order>. Our Order is merely a struct that is defined this way:

struct Order{

int price_;

int qty_;

std::chrono::milliseconds time_;

};

Insertion

After every insertion if there is no counter matching resting order for the incoming order, it will be inserted inside its right position among the existing one.

The insertion of the order in its position will require us to sort the vector after every insertion, which will be in a sense quite expensive.

Another possibility is that instead of sorting the whole vector every time, as we know that the previously inserted elements are already sorted we can do binary search insertion.

But because the position we will find to insert the element will be already occupied, it will require us to create a new vector and copy inside it the previous elements in order to be able insert our fresh new order, which will be also quite expensive.

Cancellation/Deletion

For perfoming the cancellation it just resumes itself to a search inside the vector and putting a tombstone at the specific order position, which takes O(n) time in the worst case.

std::map<Price,std::vector<Order>>

With this DS we are able to aggregate orders with the same price inside the same vector. So when you give us a specific price, we are easily able to list out all the orders with that price.

Insertion

The insertion in this case is very simple, because the map subscript operator has this property that if the key does not already exists it'cas created, when we have a new order we call the subscript operator with the price of the order and insert it inside the vector.

using SellBook = std::map<Price, std::vector<Order>, std::less<>>;

class OrderBook {

SellBook sb_;

auto insert(Order&& o) -> void {

sb_[o.price()].push_back( std::move(o) );

}

};

This guarantees us at the same time that the elements are sorted and are in their right position inside the container.

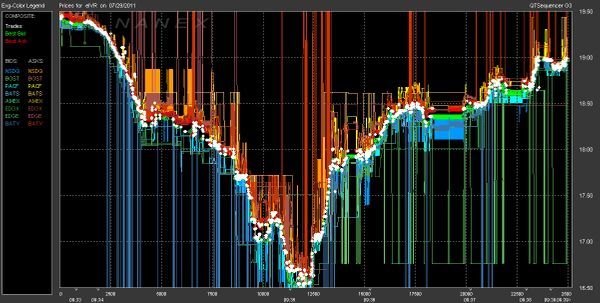

Throwing at the algorithm using this data structure 1 million random orders we get a throughput of 1.1763695 × 10-4s per order.

Cancellation/Deletion

The cancellation here as in the case of std::vector<Order> consist of putting a tombstone on the order after making a search to get it.

The cancellation from my computer tend to have some saturation at 250 micro second, despite some CPU overload that causes some little spikes.

Insertion inside std::vector<Order> vs std::map<>

Comparing the insertion between the two selected data structures, we can see that the cost of resorting the vector after every insertion is very high as expected.

Cancellation inside std::vector<Order> vs std::map<>

Cancellation can be perfomed at a reasonable speed in the two data structures, but we observed a difference when chosing the map data structure instead.

Conclusion

There maybe still some room left for optimizations. We are actively working at Bitwyre to provide you with a fair and fast order execution.

Subscribe and stay tuned because there will be lot of more experiments going on. Untill then.